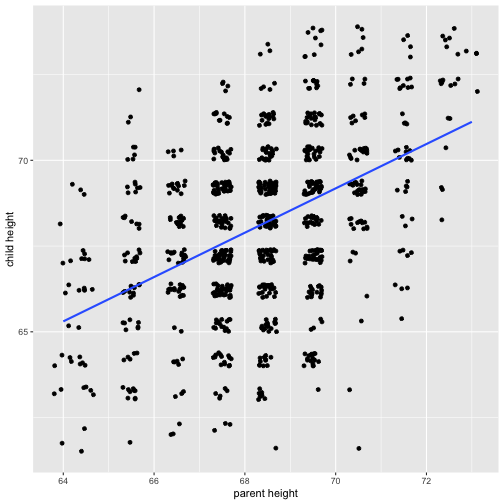

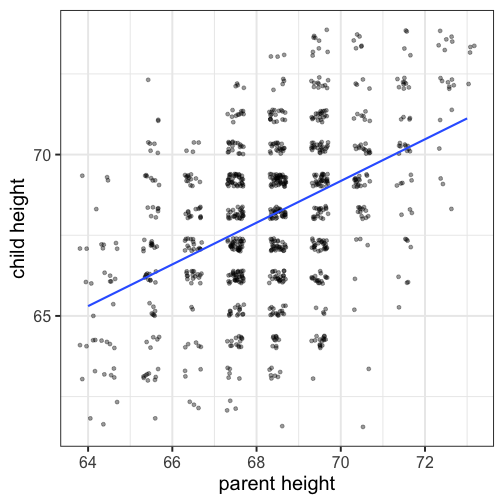

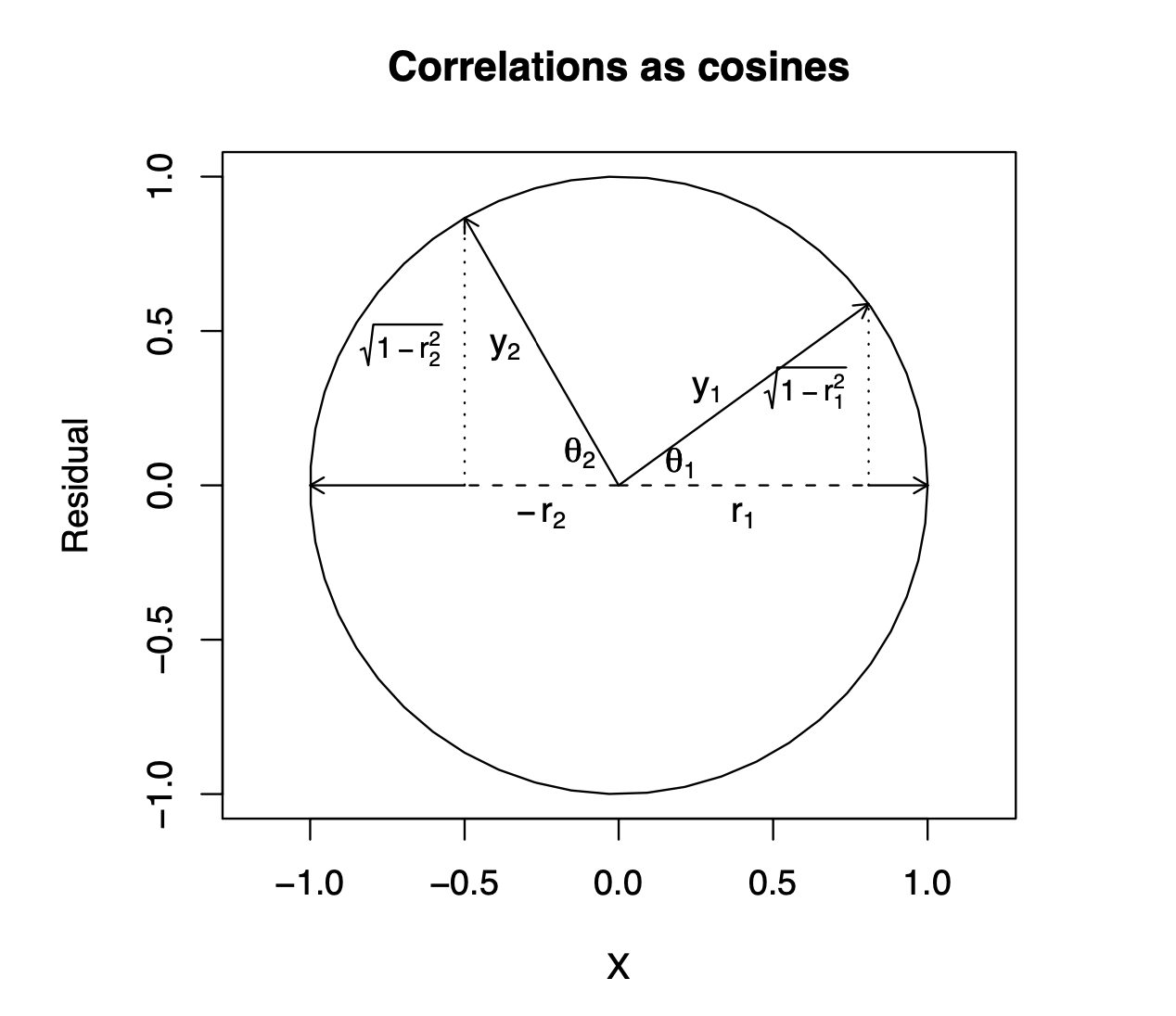

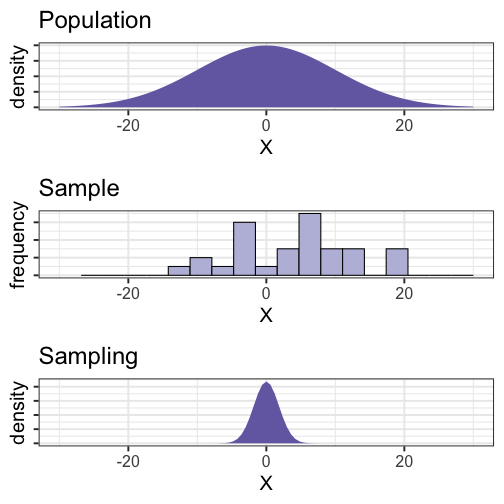

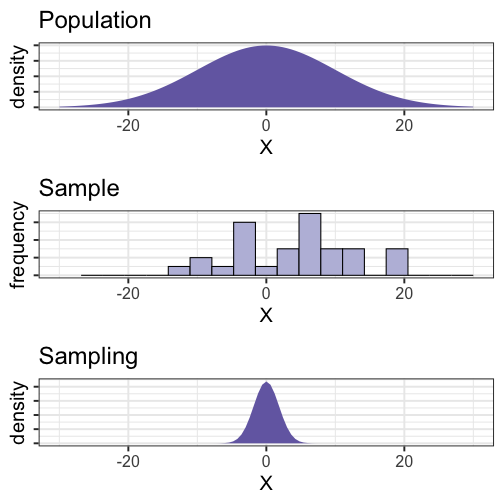

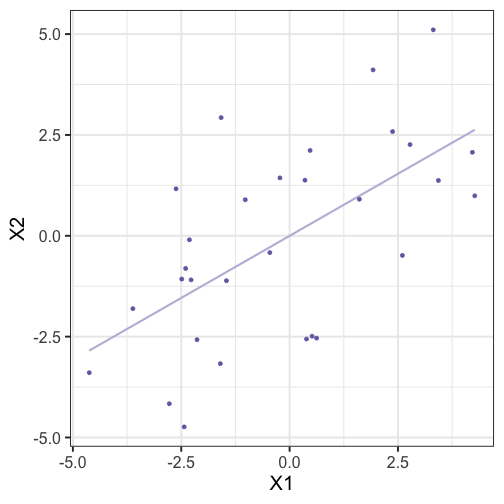

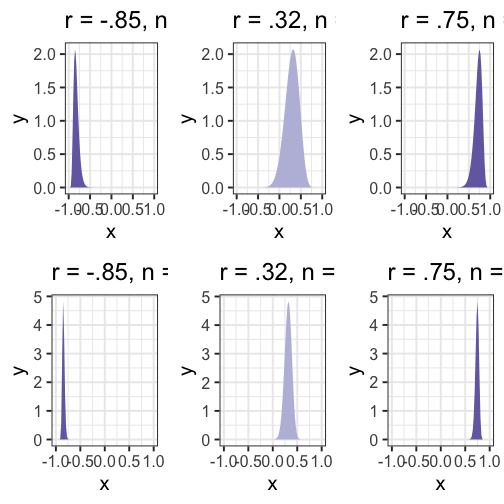

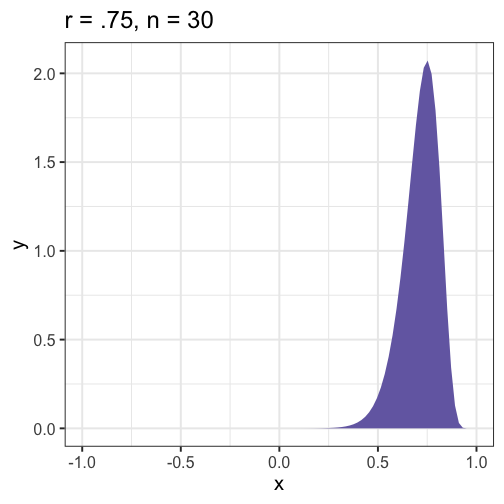

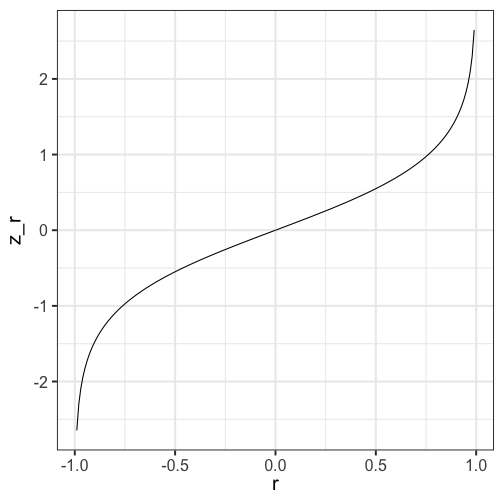

class: center, middle, inverse, title-slide # Psy 612: Data Analysis II --- ## Welcome back! **Last term:** - Probability, sampling, hypothesis testing - Descriptive statistics - A little matrix algebra **This term:** - Model building -- - Correlations - Linear regression - General linear model - Multiple regresesion --- ## Website [UOpsych.github.io/psy612/](uopsych.github.io/psy612/) Structure of this course: - Lectures, Labs, Reading - Weekly quizzes (T/F) - Homework assignments (2) - Final project (1) If you took PSY 611 in Fall 2018 or earlier, take a look at the materials for last term: [UOpsych.github.io/psy611/](uopsych.github.io/psy611/) --- ## Relationships - What is the relationship between IV and DV? - Measuring relationships depend on type of measurement - You have primarily been working wtih categorical IVs (*t*-test, chi-square) --- ## Scatter Plot with best fit line <!-- --> --- ## Review of Dispersion Variation (sum of squares) `$$SS = {\sum{(x-\bar{x})^2}}$$` `$$SS = {\sum{(x-\mu)^2}}$$` --- ## Review of Dispersion Variance `$$\large s^{2} = {\frac{\sum{(x-\bar{x})^2}}{N-1}}$$` `$$\large\sigma^{2} = {\frac{\sum{(x-\mu)^2}}{N}}$$` --- ## Review of Dispersion Standard Deviation `$$\large s = \sqrt{\frac{\sum{(x-\bar{x})^2}}{N-1}}$$` `$$\large\sigma = \sqrt{\frac{\sum{(x-\mu)^2}}{N}}$$` --- class: center Formula for standard error of the mean? -- `$$\sigma_M = \frac{\sigma}{\sqrt{N}}$$` `$$\sigma_M = \frac{\hat{s}}{\sqrt{N}}$$` --- ## Associations - i.e., relationships - to look at continuous variable associations we need to think in terms of how variables relate to one another --- ## Associations Covariation (cross products) **Sample:** `$$\large SS = {\sum{(x-\bar{x})(y-\bar{y})}}$$` **Population:** `$$SS = {\sum{{(x-\mu_{x}})(y-\mu_{y})}}$$` --- ## Associations Covariance **Sample:** `$$\large cov_{xy} = {\frac{\sum{(x-\bar{x})(y-\bar{y})}}{N-1}}$$` **Population:** `$$\large \sigma_{xy}^{2} = {\frac{\sum{(x-\mu_{x})(y-\mu_{y})}}{N}}$$` -- >- Covariance matrix is basis for many analyses >- What are some issues that may arise when comparing covariances? --- ## Associations Correlations **Sample:** `$$\large r_{xy} = {\frac{\sum({z_{x}z_{y})}}{N}}$$` **Population:** `$$\large \rho_{xy} = {\frac{cov(X,Y)}{\sigma_{x}\sigma_{y}}}$$` Many other formulas exist for specific types of data, these were more helpful when we computed everything by hand (more on this later). --- ## Correlations - How much two variables are linearly related - -1 to 1 - Invariant to changes in mean or scaling - Most common (and basic) effect size measure - Will use to build our regression model --- ## Correlations <!-- --> --- ## Conceptually Ways to think about a correlation: * How two vectors of numbers co-relate * Product of z-scores + Mathematically, it is * The average squared distance between two vectors in the same space * The cosine of the angle between Y and the projected Y from X `\((\hat{Y})\)`. ---  --- ## Statistical test Hypothesis testing `$$\large H_{0}: \rho_{xy} = 0$$` `$$\large H_{A}: \rho_{xy} \neq 0$$` Assumes: - Observations are independent - Symmetric bivariate distribution (joint probability distribution) --- Univariate distributions <!-- --> --- ### Population <!-- --> --- ### Sample <!-- --> --- ### Sampling distribution? -- The sampling distribution we use depends on our null hypothesis. -- If our null hypothesis is the nil `\((\rho = 0)\)` , then we can use a ***t*-distribution** to estimate the statistical significance of a correlation. --- ## Statistical test Test statistic `$$\large t = {\frac{r}{SE_{r}}}$$` -- .pull-left[ `$$\large SE_r = \sqrt{\frac{1-r^2}{N-2}}$$` `$$\large t = {\frac{r}{\sqrt{\frac{1-r^{2}}{N-2}}}}$$` ] -- .pull-right[ `$$\large DF = N-2$$` ] --- ## Power calculations ```r library(pwr) pwr.r.test(n = , r = .1, sig.level = .05 , power = .8) ``` ``` ## ## approximate correlation power calculation (arctangh transformation) ## ## n = 781.7516 ## r = 0.1 ## sig.level = 0.05 ## power = 0.8 ## alternative = two.sided ``` ```r pwr.r.test(n = , r = .3, sig.level = .05 , power = .8) ``` ``` ## ## approximate correlation power calculation (arctangh transformation) ## ## n = 84.07364 ## r = 0.3 ## sig.level = 0.05 ## power = 0.8 ## alternative = two.sided ``` --- ## Power calculations - But what is your confidence? - N = 84 gives you CI[.09, .48] - Schönbrodt & Perugini (2013) suggest correlations 'stabilize' at 250+ regardless of effect size - CI[.19, .39] --- ## Fisher's r to z' transformation .left-column[ If we want to make calculations based on `\(\rho \neq 0\)` then we will run into a skewed sampling distribution. ] <!-- --> --- ## Fisher's r to z' transformation - Skewed sampling distribution will rear its head when: * `\(H_{0}: \rho \neq 0\)` * Calculating confidence intervals * Testing two correlations against one another --- <!-- --> --- ## Fisher’s r to z’ transformation - r to z': `$$\large z^{'} = {\frac{1}{2}}ln{\frac{1+r}{1-r}}$$` --- ## Fisher’s r to z’ transformation <!-- --> --- ## Steps for computing confidence interval 1. Transform r into z' 2. Compute CI as you normally would using z' 3. revert back to r $$ SE_z = \frac{1}{\sqrt{N-3}}$$ `$$\large r = {\frac{e^{2z'}-1}{e^{2z'}+1}}$$` --- ### Example In a sample of 42 students, you calculate a correlation of 0.44 between hours spent outside on Saturday and self-rated health. What is the precision of your estimate? .pull-left[ `$$z = {\frac{1}{2}}ln{\frac{1+.44}{1-.44}} = 0.47$$` `$$SE_z = \frac{1}{\sqrt{42-3}} = 0.16$$` `$$CI_{Z_{LB}} = 0.47-(2.021)0.16 = 0.15$$` `$$CI_{Z_{UB}} = 0.47+(2.021)0.16 = 0.8$$` ] --- `$$CI_{r_{LB}} = {\frac{e^{2(0.15)}-1}{e^{2(0.15)}+1}} = 0.15$$` `$$CI_{r_{UB}} = = {\frac{e^{2(0.8)}-1}{e^{2(0.8)}+1}} = 0.66$$` --- ## Comparing two correlations Again, we use the Fisher’s r to z’ transformation. Here, we're transforming the correlations into z's, then using the difference between z's to calculate the test statistic. `$$Z = \frac{z_1^{'}- z_2^{'}}{se_{z_1-z_2}}$$` `$$se_{z_1-z_2} = \sqrt{se_{z_1}+se_{z_2}} = \sqrt{\frac{1}{n_1-3}+\frac{1}{n_2-3}}$$` --- ## Example Replication of Hill et al. (2012) where they found that the correlation between narcissism and happiness was greater for young adults compared to older adults .pull-left[ ### Young adults `$$N = 327$$` `$$r = .402$$` ] .pull-right[ ### Older adults `$$N = 273$$` `$$r = .283$$` ] `$$H_0:\rho_1 = \rho_2$$` `$$H_1:\rho_1 \neq \rho_2$$` --- `$$z_1^{'} = {\frac{1}{2}}ln{\frac{1+.402}{1-.402}} = 0.426$$` `$$z_2^{'} = {\frac{1}{2}}ln{\frac{1+.283}{1-.283}} = 0.291$$` `$$se_{z_1-z_2} = \sqrt{\frac{1}{327-3}+\frac{1}{273-3}} = 0.082$$` `$$\text{Test statistic} = \frac{z_1^{'}-z_2^{'}}{se_{z_1-z_2}} = \frac{0.426- 0.291}{0.082} = 1.639$$` ```r pnorm(abs(zstat), lower.tail = F)*2 ``` ``` ## [1] 0.1011256 ``` --- ## Effect size - The strength of relationship between two variables - `\(\eta^2\)`, cohen’s d, cohen’s f, hedges g, `\(R^2\)` , Risk-ratio, etc - Significance is a function of effect size and sample size - Statistical significance `\(\neq\)` practical significance --- ## Effect size How big is practical? - Cohen (.1, .3., .5) - Meyer & Hemphill .3 is average - Rosenthaul: Drug TX? | Alive | Dead ----------|-------|-------- Treatment | 65 | 35 No Tx | 35 | 65 --- ## What is the size of the correlation? - Chemotherapy and breast cancer survival? - Batting ability and hit success on a single at bat? - Antihistamine use and reduced sneezing/runny nose? - Combat exposure and PTSD? - Ibuprofen on pain reduction? - Gender and weight? - Therapy and well being? - Observer ratings of attractiveness? - Gender and arm strength? - Nearness to equator and daily temperature for U.S.? --- ## What is the size of the correlation? - Chemotherapy and breast cancer survival? (.03) - Batting ability and hit success on a single at bat? (.06) - Antihistamine use and reduced sneezing/runny nose? (.11) - Combat exposure and PTSD? (.11) - Ibuprofen on pain reduction? (.14) - Gender and weight? (.26) - Therapy and well being? (.32) - Observer ratings of attractiveness? (.39) - Gender and arm strength? (.55) - Nearness to equator and daily temperature for U.S.? (.60) --- ## Questions to ask yourself: - What is your N? - What is the typical effect size in the field? - Study design? - What is your DV? - Importance? - Same method as IV (method variance)? --- class: inverse ## Next time... More correlations