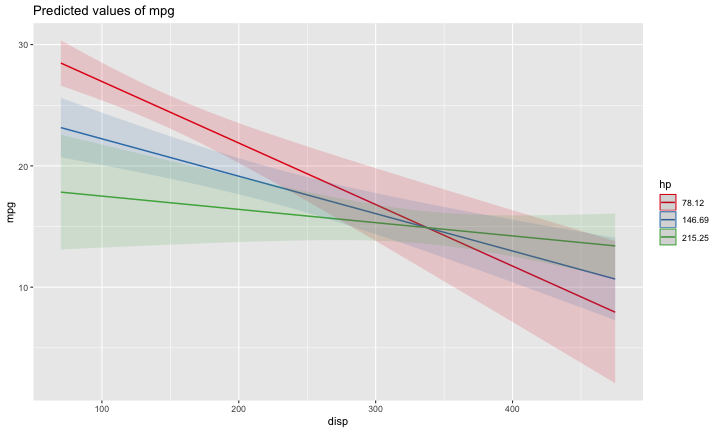

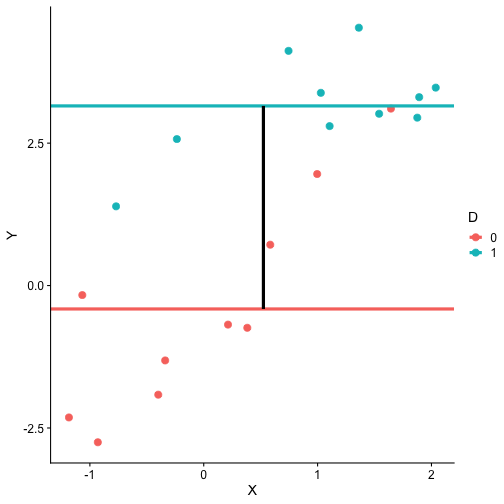

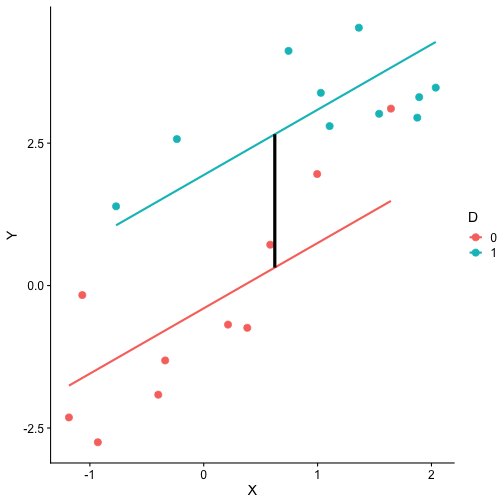

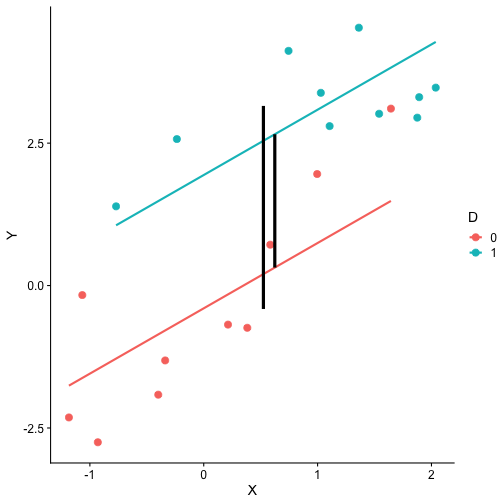

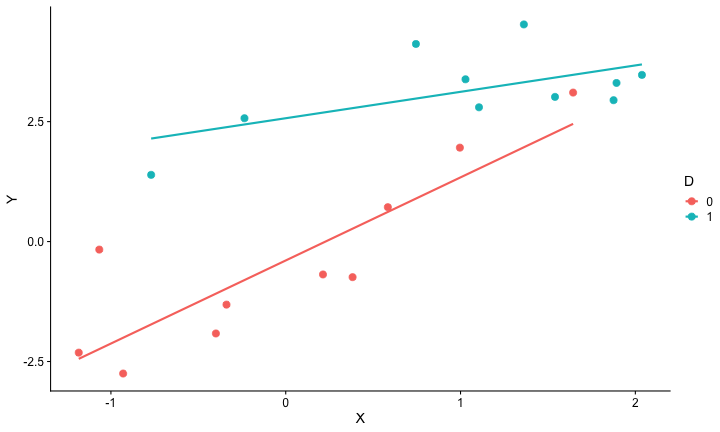

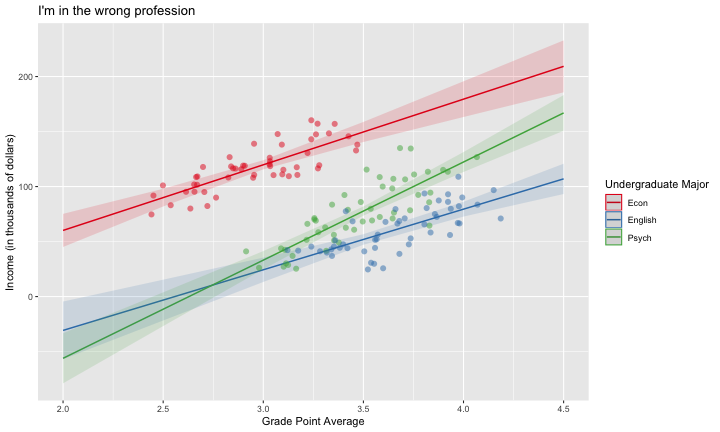

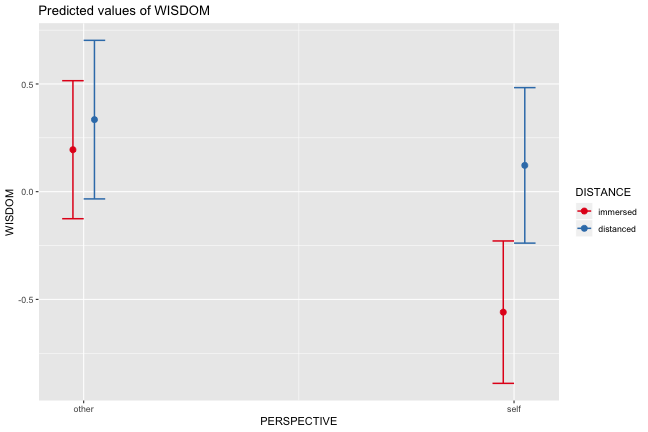

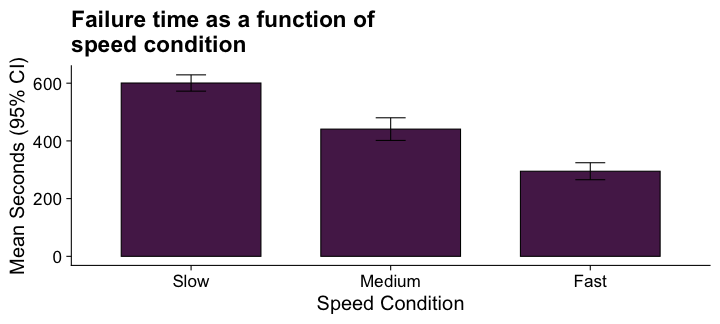

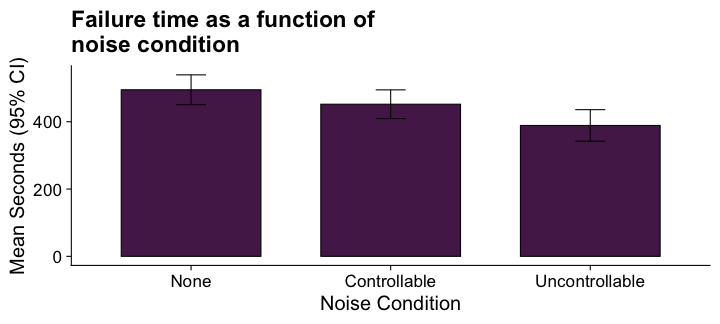

class: center, middle, inverse, title-slide

# Interactions (II)

---

## Last time...

Introduction to interactions with two continuous predictors

---

### Recap

We use interaction terms to test the hypothesis that the relationship between X and Y changes as a function of Z.

- social support buffers the association between stress and anxiety
- conscientiousness predicts better health for affluent individuals and worse health for non-affluent individuals

The interaction term represents how much the slope of X changes for each one-unit increase in Z, and also how much the slope of Z changes for each one-unit increase in X. Interactions are symmetric.

---

### Recap: Output

```r
cars_model = lm(mpg ~ disp*hp, data = mtcars)
summary(cars_model)
```

```
## 
## Call:
## lm(formula = mpg ~ disp * hp, data = mtcars)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -3.5153 -1.6315 -0.6346  0.9038  5.7030 
## 
## Coefficients:
##               Estimate Std. Error t value Pr(>|t|)    
## (Intercept)  3.967e+01  2.914e+00  13.614 7.18e-14 ***
## disp        -7.337e-02  1.439e-02  -5.100 2.11e-05 ***
## hp          -9.789e-02  2.474e-02  -3.956 0.000473 ***
## disp:hp      2.900e-04  8.694e-05   3.336 0.002407 ** 
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 2.692 on 28 degrees of freedom
## Multiple R-squared:  0.8198,  Adjusted R-squared:  0.8005 
## F-statistic: 42.48 on 3 and 28 DF,  p-value: 1.499e-10
```

---

### Recap: Simple slopes

```r
library(reghelper)
simple_slopes(cars_model, 
              levels = list(hp = c(78, 147, 215)))
```

```
##     disp  hp Test Estimate Std. Error t value df  Pr(>|t|) Sig.
## 1 sstest  78       -0.0507     0.0088 -5.7448 28 3.645e-06  ***
## 2 sstest 147       -0.0307     0.0064 -4.8207 28 4.528e-05  ***
## 3 sstest 215       -0.0110     0.0086 -1.2782 28    0.2117     
```

---

### Recap: Plot simple slopes

```r
library(sjPlot)
plot_model(cars_model, type = "int", mdrt.values = "meansd")
```

<!-- -->

---

## Today

Mixing categorical and continuous predictors

Two categorical predictors

Start discussing Factorial ANOVA

---

## Mixing categorical and continuous

Consider the case where D is a variable representing two groups. In a regression with D as the only predictor, how do we interpret the coefficient for D?

`$$\hat{Y} = b_{0} + b_{1}D$$`

--

`\(b_0\)` is the mean of the reference group, and `\(b_1\)` is the difference in means between the two groups.

---

### Interpreting slopes

Extending this to multiple regression, where X is continuous and D is a dummy code representing two groups:

`$$\hat{Y} = b_{0} + b_{1}D + b_2X$$`

How do we interpret `\(b_1\)`?

--

`\(b_1\)` is the difference in means between the two groups *if the two groups have the same average level of X*, that is, holding X constant. This, by the way, is ANCOVA.

---

### Visualizing

<!-- -->

---

### Visualizing

<!-- -->

---

### Visualizing

<!-- -->

---

### 3 or more groups

We might be interested in the relative contributions of our two variables, but they are on different scales, so we cannot compare them using the unstandardized regression coefficients. Standardized coefficients can be used if we only have two groups, but what if we have 3 or more?

--

Just like we use `\(R^2\)` to report how much variance in Y is explained by the model, we can break this down into the contributions of each variable in the model, including factors with 3+ levels.
`$$\large \eta^2 = \frac{SS_{\text{Variable}}}{SS_{Y}} = \frac{SS_{\text{Variable}}}{SS_{\text{Total}}}$$`

---

```r
mod = lm(Y ~ X + D, data = df)
anova(mod)
```

```
## Analysis of Variance Table
## 
## Response: Y
##           Df Sum Sq Mean Sq F value    Pr(>F)    
## X          1 64.045  64.045  61.489 4.788e-07 ***
## D          1 20.071  20.071  19.270 0.0003998 ***
## Residuals 17 17.707   1.042                      
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

`$$\large \eta^2_{X} = \frac{64.045}{64.045+20.071+17.707} = .62899 \approx 63\%$$`

`$$\large \eta^2_{D} = \frac{20.071}{64.045+20.071+17.707} = .19712 \approx 20\%$$`

Note that `anova()` reports sequential (Type I) sums of squares: each term is credited only with variance not already explained by the terms listed above it, so reordering correlated predictors can change these values.

---

## Interactions

Now extend this example to include joint effects, not just additive effects:

`$$\hat{Y} = b_{0} + b_{1}D + b_2X + b_3DX$$`

How do we interpret `\(b_1\)`?

--

`\(b_1\)` is the difference in means between the two groups *when X is 0*.

What is the interpretation of `\(b_2\)`?

--

`\(b_2\)` is the slope of X among the reference group.

What is the interpretation of `\(b_3\)`?

--

`\(b_3\)` is the difference in slopes between the reference group and the other group.

---

### Visualizing

<!-- -->

Where should we draw the segment to compare means?

???

Where you draw the segment changes the difference in means. That's why `\(b_1\)` can only be interpreted as the difference in means when X = 0.

---

## Example

The University of Oregon is interested in understanding how undergraduates' academic performance and choice of major impact their career success. They contact 150 alumni between the ages of 25 and 35 and collect their current salary (in thousands of dollars), their primary undergraduate major, and their GPA upon graduating.

<!-- A recent study by [Craig, Nelson, & Dixson, 2019](https://journals.sagepub.com/doi/full/10.1177/0956797619834876) examined whether the presence or absence of a beard made it easier to decode a man's facial expression. In this study, participants were presented with photographs of bearded and clean-shaven men making expressive faces.
-->
<!--  -->
<!-- Participants were asked to categorize each face as "happy" or "angry" as quickly as possible. Reaction time (in ms) was the outcome. -->
<!-- I want to know whether men who are good at identifying angry expressions of clean-shaven men are also good at identifying angry expressions of bearded men. -->
<!-- I also want to know if that relationship differs among men (participants) who are bearded. -->
<!--  -->

---

### `R` code

```r
library(psych)
table(inc_data$major)
```

```
## 
##    Econ English   Psych 
##      50      50      50 
```

```r
describe(inc_data[,c("gpa", "income")], fast = T)
```

```
##        vars   n  mean   sd   min    max  range   se
## gpa       1 150  3.36  0.4  2.44   4.19   1.74 0.03
## income    2 150 84.35 34.0 24.67 160.27 135.60 2.78
```

---

### Model summary

```r
career.mod = lm(income ~ gpa*major, data = inc_data)
summary(career.mod)
```

```
## 
## Call:
## lm(formula = income ~ gpa * major, data = inc_data)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -42.625 -11.869   0.376   9.301  40.942 
## 
## Coefficients:
##                  Estimate Std. Error t value Pr(>|t|)    
## (Intercept)       -59.181     22.902  -2.584   0.0108 *  
## gpa                59.660      7.705   7.743 1.58e-12 ***
## majorEnglish      -81.747     37.149  -2.201   0.0294 *  
## majorPsych       -175.314     35.462  -4.944 2.10e-06 ***
## gpa:majorEnglish   -4.562     11.089  -0.411   0.6814    
## gpa:majorPsych     29.545     10.949   2.698   0.0078 ** 
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 14.91 on 144 degrees of freedom
## Multiple R-squared:  0.8142,  Adjusted R-squared:  0.8077 
## F-statistic: 126.2 on 5 and 144 DF,  p-value: < 2.2e-16
```

---

### Model summary: centering predictors

```r
inc_data$gpa_c = inc_data$gpa - mean(inc_data$gpa)
career.mod_c = lm(income ~ gpa_c*major, data = inc_data)
summary(career.mod_c)
```

```
## 
## Call:
## lm(formula = income ~ gpa_c * major, data = inc_data)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -42.625 -11.869   0.376   9.301  40.942 
## 
## Coefficients:
##                    Estimate Std. Error t value Pr(>|t|)    
## (Intercept)         141.428      3.752  37.691  < 2e-16 ***
## gpa_c                59.660      7.705   7.743 1.58e-12 ***
## majorEnglish        -97.086      4.907 -19.783  < 2e-16 ***
## majorPsych          -75.965      4.384 -17.327  < 2e-16 ***
## gpa_c:majorEnglish   -4.562     11.089  -0.411   0.6814    
## gpa_c:majorPsych     29.545     10.949   2.698   0.0078 ** 
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 14.91 on 144 degrees of freedom
## Multiple R-squared:  0.8142,  Adjusted R-squared:  0.8077 
## F-statistic: 126.2 on 5 and 144 DF,  p-value: < 2.2e-16
```

---

### Plotting results

```r
library(sjPlot)
plot_model(career.mod, type = "int", show.data = T,
           axis.title = c("Grade Point Average", 
                          "Income (in thousands of dollars)"),
           legend.title = "Undergraduate Major",
           title = "I'm in the wrong profession", wrap.title = T)
```

<!-- -->

---

## Two categorical predictors

If both X and M are categorical variables, the coefficients no longer represent means and slopes, but means and differences in means.

Recall our Solomon's paradox example from a few weeks ago:

```r
library(here)
```

```
## here() starts at /Users/sweston2/Google Drive/Work/Teaching/Courses/Graduate/Statistics and Methods Sequence/psy612
```

```r
solomon = read.csv(here("data/solomon.csv"))
```

```r
head(solomon[,c("PERSPECTIVE", "DISTANCE", "WISDOM")])
```

```
##   PERSPECTIVE  DISTANCE      WISDOM
## 1       other  immersed -0.27589395
## 2       other distanced  0.42949213
## 3       other distanced -0.02785874
## 4       other distanced  0.53271500
## 5        self distanced  0.62299793
## 6        self distanced -1.99578129
```

---

### Model summary

```r
solomon.mod = lm(WISDOM ~ PERSPECTIVE*DISTANCE, data = solomon)
summary(solomon.mod)
```

```
## 
## Call:
## lm(formula = WISDOM ~ PERSPECTIVE * DISTANCE, data = solomon)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -2.6809 -0.4209  0.0473  0.6694  2.3499 
## 
## Coefficients:
##                                  Estimate Std. Error t value Pr(>|t|)  
*## (Intercept)                        0.3345     0.1878   1.781   0.0776 .
## PERSPECTIVEself                   -0.2124     0.2630  -0.808   0.4210  
## DISTANCEimmersed                  -0.1396     0.2490  -0.561   0.5760  
## PERSPECTIVEself:DISTANCEimmersed  -0.5417     0.3526  -1.536   0.1273  
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 0.9389 on 111 degrees of freedom
##   (5 observations deleted due to missingness)
## Multiple R-squared:  0.1262,  Adjusted R-squared:  0.1026 
## F-statistic: 5.343 on 3 and 111 DF,  p-value: 0.001783
```

---

### Model summary

```r
solomon.mod = lm(WISDOM ~ PERSPECTIVE*DISTANCE, data = solomon)
summary(solomon.mod)
```

```
## 
## Call:
## lm(formula = WISDOM ~ PERSPECTIVE * DISTANCE, data = solomon)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -2.6809 -0.4209  0.0473  0.6694  2.3499 
## 
## Coefficients:
##                                  Estimate Std. Error t value Pr(>|t|)  
## (Intercept)                        0.3345     0.1878   1.781   0.0776 .
*## PERSPECTIVEself                   -0.2124     0.2630  -0.808   0.4210  
## DISTANCEimmersed                  -0.1396     0.2490  -0.561   0.5760  
## PERSPECTIVEself:DISTANCEimmersed  -0.5417     0.3526  -1.536   0.1273  
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 0.9389 on 111 degrees of freedom
##   (5 observations deleted due to missingness)
## Multiple R-squared:  0.1262,  Adjusted R-squared:  0.1026 
## F-statistic: 5.343 on 3 and 111 DF,  p-value: 0.001783
```

---

### Model summary

```r
solomon.mod = lm(WISDOM ~ PERSPECTIVE*DISTANCE, data = solomon)
summary(solomon.mod)
```

```
## 
## Call:
## lm(formula = WISDOM ~ PERSPECTIVE * DISTANCE, data = solomon)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -2.6809 -0.4209  0.0473  0.6694  2.3499 
## 
## Coefficients:
##                                  Estimate Std. Error t value Pr(>|t|)  
## (Intercept)                        0.3345     0.1878   1.781   0.0776 .
## PERSPECTIVEself                   -0.2124     0.2630  -0.808   0.4210  
*## DISTANCEimmersed                  -0.1396     0.2490  -0.561   0.5760  
## PERSPECTIVEself:DISTANCEimmersed  -0.5417     0.3526  -1.536   0.1273  
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 0.9389 on 111 degrees of freedom
##   (5 observations deleted due to missingness)
## Multiple R-squared:  0.1262,  Adjusted R-squared:  0.1026 
## F-statistic: 5.343 on 3 and 111 DF,  p-value: 0.001783
```

---

### Model summary

```r
solomon.mod = lm(WISDOM ~ PERSPECTIVE*DISTANCE, data = solomon)
summary(solomon.mod)
```

```
## 
## Call:
## lm(formula = WISDOM ~ PERSPECTIVE * DISTANCE, data = solomon)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -2.6809 -0.4209  0.0473  0.6694  2.3499 
## 
## Coefficients:
##                                  Estimate Std. Error t value Pr(>|t|)  
## (Intercept)                        0.3345     0.1878   1.781   0.0776 .
## PERSPECTIVEself                   -0.2124     0.2630  -0.808   0.4210  
## DISTANCEimmersed                  -0.1396     0.2490  -0.561   0.5760  
*## PERSPECTIVEself:DISTANCEimmersed  -0.5417     0.3526  -1.536   0.1273  
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 0.9389 on 111 degrees of freedom
##   (5 observations deleted due to missingness)
## Multiple R-squared:  0.1262,  Adjusted R-squared:  0.1026 
## F-statistic: 5.343 on 3 and 111 DF,  p-value: 0.001783
```

---

### Model summary

```r
solomon.mod = lm(WISDOM ~ PERSPECTIVE*DISTANCE, data = solomon)
summary(solomon.mod)
```

```
## 
## Call:
## lm(formula = WISDOM ~ PERSPECTIVE * DISTANCE, data = solomon)
## 
## Residuals:
##     Min      1Q  Median      3Q     Max 
## -2.6809 -0.4209  0.0473  0.6694  2.3499 
## 
## Coefficients:
##                                  Estimate Std. Error t value Pr(>|t|)  
## (Intercept)                        0.3345     0.1878   1.781   0.0776 .
## PERSPECTIVEself                   -0.2124     0.2630  -0.808   0.4210  
## DISTANCEimmersed                  -0.1396     0.2490  -0.561   0.5760  
## PERSPECTIVEself:DISTANCEimmersed  -0.5417     0.3526  -1.536   0.1273  
## ---
## Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
## 
## Residual standard error: 0.9389 on 111 degrees of freedom
##   (5 observations deleted due to missingness)
## Multiple R-squared:  0.1262,  Adjusted R-squared:  0.1026 
*## F-statistic: 5.343 on 3 and 111 DF,  p-value: 0.001783
```

---

### Plotting results

```r
plot_model(solomon.mod, type = "int")
```

<!-- -->

---
class: inverse

## Factorial ANOVA

A general linear model with two or more categorical predictors and their interaction is formally known as **Factorial ANOVA**.

A factorial design is used when there is an interest in how two or more variables (or factors) affect the outcome.

* Rather than conduct separate one-way ANOVAs for each factor, they are all included in one analysis.

* The unique and important advantage of a factorial ANOVA over separate one-way ANOVAs is the ability to examine interactions.

---

.pull-left[
The example data are from a simulated study in which 180 participants performed an eye-hand coordination task that required them to keep a mouse pointer on a red dot moving in a circular motion.
]

.pull-right[

]

The outcome was the time (in seconds) of the 10th failure. The experiment used a completely crossed, 3 x 3 factorial design. One factor was dot speed: .5, 1, or 1.5 revolutions per second. The second factor was noise condition. Some participants performed the task without any noise; others were subjected to periodic and unpredictable 3-second bursts of 85 dB white noise played over earphones. Of those subjected to noise, half could do nothing to stop the noise (uncontrollable noise); half believed they could stop the noise by pressing a button (controllable noise).

---

### Terminology

In a **completely crossed** factorial design, each level of one factor occurs in combination with each level of the other factor.

If equal numbers of participants occur in each combination, the design is **balanced**. This has some distinct advantages (described later).
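
One way to check whether a data set is completely crossed and balanced is to count participants in each combination of factor levels with base R's `table()`. The sketch below uses a hypothetical design grid (20 participants per cell, matching the 180-participant example); the object names `design` and `cell_counts` and the column names are assumptions for illustration, not the study's actual data.

```r
# Hypothetical design grid, NOT the study data: every combination of
# Speed and Noise, with 20 participants in each cell (180 rows)
design = expand.grid(
  participant = 1:20,
  Speed = factor(c("Slow", "Medium", "Fast"),
                 levels = c("Slow", "Medium", "Fast")),
  Noise = factor(c("None", "Controllable", "Uncontrollable"),
                 levels = c("None", "Controllable", "Uncontrollable"))
)

# Participants per Noise x Speed cell
cell_counts = table(design$Noise, design$Speed)
cell_counts

all(cell_counts > 0)                         # completely crossed?
length(unique(as.vector(cell_counts))) == 1  # balanced?
```

With real data, the same `table()` call would be applied to the data set's own factor columns.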

| | Slow | Medium | Fast |
|:-|:-:|:-:|:-:|
| No Noise | X | X | X |
| Controllable Noise | X | X | X |
| Uncontrollable Noise | X | X | X |

---

### Terminology

We describe the factorial ANOVA design by the number of **levels** of each **factor**.

- Factor: a variable that is being manipulated or in which there are two or more groups

- Level: the different groups within a factor

In this case, we have a 3 x 3 ANOVA ("three by three"), because our first factor (speed) has three levels (slow, medium, and fast) and our second factor (noise) also has three levels (none, controllable, and uncontrollable).

---

### Questions

<table class="table" style="margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Noise </th> <th style="text-align:right;"> Slow </th> <th style="text-align:right;"> Medium </th> <th style="text-align:right;"> Fast </th> <th style="text-align:right;"> Marginal </th> </tr> </thead> <tbody> <tr grouplength="3"><td colspan="5" style="border-bottom: 1px solid;"><strong></strong></td></tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> None </td> <td style="text-align:right;"> 630.72 </td> <td style="text-align:right;"> 525.29 </td> <td style="text-align:right;"> 329.28 </td> <td style="text-align:right;"> 495.10 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Controllable </td> <td style="text-align:right;"> 576.67 </td> <td style="text-align:right;"> 492.72 </td> <td style="text-align:right;"> 287.23 </td> <td style="text-align:right;"> 452.21 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Uncontrollable </td> <td style="text-align:right;"> 594.44 </td> <td style="text-align:right;"> 304.62 </td> <td style="text-align:right;"> 268.16 </td> <td style="text-align:right;"> 389.08 </td> </tr> <tr> <td style="text-align:left;"> Marginal </td> <td style="text-align:right;"> 600.61 </td> <td style="text-align:right;"> 440.88 </td> <td style="text-align:right;"> 294.89 </td> <td style="text-align:right;"> 445.46 </td> </tr> </tbody> </table>

There are three important ways we can view the results of this experiment. Two of them correspond to questions that would arise in a simple one-way ANOVA:

Regardless of noise condition, does speed of the moving dot affect performance?

Regardless of dot speed, does noise condition affect performance?

---

### Marginal means

<table class="table" style="margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Noise </th> <th style="text-align:right;"> Slow </th> <th style="text-align:right;"> Medium </th> <th style="text-align:right;"> Fast </th> <th style="text-align:right;"> Marginal </th> </tr> </thead> <tbody> <tr grouplength="3"><td colspan="5" style="border-bottom: 1px solid;"><strong></strong></td></tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> None </td> <td style="text-align:right;"> 630.72 </td> <td style="text-align:right;"> 525.29 </td> <td style="text-align:right;"> 329.28 </td> <td style="text-align:right;"> 495.10 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Controllable </td> <td style="text-align:right;"> 576.67 </td> <td style="text-align:right;"> 492.72 </td> <td style="text-align:right;"> 287.23 </td> <td style="text-align:right;"> 452.21 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Uncontrollable </td> <td style="text-align:right;"> 594.44 </td> <td style="text-align:right;"> 304.62 </td> <td style="text-align:right;"> 268.16 </td> <td style="text-align:right;"> 389.08 </td> </tr> <tr> <td style="text-align:left;font-weight: bold;color: white !important;background-color: #562457 !important;"> Marginal </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 600.61 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 440.88 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 294.89 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 445.46 </td> </tr> </tbody> </table>

We can answer those questions by examining the marginal means, which isolate one factor while collapsing across the other factor.

Regardless of noise condition, does speed of the moving dot affect performance? Faster-moving dots are harder to track, so participants reach their 10th failure sooner on average.

Adding information about variability gives us a sense of whether these are significant and meaningful differences...

---

```r
library(ggpubr)
ggbarplot(data = Data, x = "Speed", y = "Time", add = c("mean_ci"),
          fill = "#562457", xlab = "Speed Condition",
          ylab = "Mean Seconds (95% CI)",
          title = "Failure time as a function of\nspeed condition") +
  cowplot::theme_cowplot(font_size = 20)
```

<!-- -->

The mean differences look substantial. The ANOVA will tell us whether the means differ significantly and how large those differences are in terms of variance accounted for.
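
---

### Sketch: computing marginal means

As a rough illustration (not the study data), the code below simulates a hypothetical balanced 3 x 3 data set with base R and computes cell and marginal means with `tapply()`. The object name `sim`, the seed, and the simulated values are assumptions; the column names mirror `Speed`, `Noise`, and `Time` from `Data`. In a balanced design, each marginal mean equals the simple average of the cell means in its row or column.

```r
# Hypothetical simulated data, NOT the study data: a balanced
# 3 x 3 design with 20 participants per cell (180 in total)
set.seed(612)
speed_levels = c("Slow", "Medium", "Fast")
noise_levels = c("None", "Controllable", "Uncontrollable")
sim = data.frame(
  Speed = factor(rep(speed_levels, each = 60), levels = speed_levels),
  Noise = factor(rep(rep(noise_levels, each = 20), times = 3),
                 levels = noise_levels),
  Time  = rnorm(180, mean = 450, sd = 100)
)

# Cell means: one mean per Noise x Speed combination (rows = Noise)
cell_means = tapply(sim$Time, list(sim$Noise, sim$Speed), mean)

# Marginal means: collapse across the other factor
speed_marginal = tapply(sim$Time, sim$Speed, mean)
noise_marginal = tapply(sim$Time, sim$Noise, mean)

# Balanced design: marginal means equal the averages of the cell means
all.equal(as.vector(speed_marginal), as.vector(colMeans(cell_means)))
all.equal(as.vector(noise_marginal), as.vector(rowMeans(cell_means)))
```

If the cell sizes were unequal, the two computations would generally disagree, because the raw marginal mean weights each cell mean by its sample size.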

---

### Marginal means

<table class="table" style="margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Noise </th> <th style="text-align:right;"> Slow </th> <th style="text-align:right;"> Medium </th> <th style="text-align:right;"> Fast </th> <th style="text-align:right;"> Marginal </th> </tr> </thead> <tbody> <tr grouplength="3"><td colspan="5" style="border-bottom: 1px solid;"><strong></strong></td></tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> None </td> <td style="text-align:right;"> 630.72 </td> <td style="text-align:right;"> 525.29 </td> <td style="text-align:right;"> 329.28 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 495.10 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Controllable </td> <td style="text-align:right;"> 576.67 </td> <td style="text-align:right;"> 492.72 </td> <td style="text-align:right;"> 287.23 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 452.21 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Uncontrollable </td> <td style="text-align:right;"> 594.44 </td> <td style="text-align:right;"> 304.62 </td> <td style="text-align:right;"> 268.16 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 389.08 </td> </tr> <tr> <td style="text-align:left;"> Marginal </td> <td style="text-align:right;"> 600.61 </td> <td style="text-align:right;"> 440.88 </td> <td style="text-align:right;"> 294.89 </td> <td style="text-align:right;font-weight: bold;color: white !important;background-color: #562457 !important;"> 445.46 </td> </tr> </tbody> </table>

Regardless of dot speed, does noise condition affect performance? Performance declines in the presence of noise, especially if the noise is uncontrollable.

Here, too, adding information about variability gives us a sense of whether these are significant and meaningful differences...

---

```r
ggbarplot(data = Data, x = "Noise", y = "Time", add = c("mean_ci"),
          fill = "#562457", xlab = "Noise Condition",
          ylab = "Mean Seconds (95% CI)",
          title = "Failure time as a function of\nnoise condition") +
  cowplot::theme_cowplot(font_size = 20)
```

<!-- -->

The mean differences are less apparent for this factor, so the ANOVA will be particularly important for informing us about statistical significance and effect size.

---

### Marginal means

<table class="table" style="margin-left: auto; margin-right: auto;"> <thead> <tr> <th style="text-align:left;"> Noise </th> <th style="text-align:right;"> Slow </th> <th style="text-align:right;"> Medium </th> <th style="text-align:right;"> Fast </th> <th style="text-align:right;"> Marginal </th> </tr> </thead> <tbody> <tr grouplength="3"><td colspan="5" style="border-bottom: 1px solid;"><strong></strong></td></tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> None </td> <td style="text-align:right;"> 630.72 </td> <td style="text-align:right;"> 525.29 </td> <td style="text-align:right;"> 329.28 </td> <td style="text-align:right;"> 495.10 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Controllable </td> <td style="text-align:right;"> 576.67 </td> <td style="text-align:right;"> 492.72 </td> <td style="text-align:right;"> 287.23 </td> <td style="text-align:right;"> 452.21 </td> </tr> <tr> <td style="text-align:left; padding-left: 2em;" indentlevel="1"> Uncontrollable </td> <td style="text-align:right;"> 594.44 </td> <td style="text-align:right;"> 304.62 </td> <td style="text-align:right;"> 268.16 </td> <td style="text-align:right;"> 389.08 </td> </tr> <tr> <td style="text-align:left;"> Marginal </td> <td style="text-align:right;"> 600.61 </td> <td style="text-align:right;"> 440.88 </td> <td style="text-align:right;"> 294.89 </td> <td style="text-align:right;"> 445.46 </td> </tr> </tbody> </table>

The **marginal mean differences** correspond to main effects. They tell us what impact a particular factor has, ignoring the impact of the other factor.

The remaining effect in a factorial design, and its primary advantage over separate one-way ANOVAs, is the ability to examine **conditional mean differences**.

---

### One-way vs Factorial

.pull-left[
**Marginal Mean Differences**

Results of one-way ANOVA

```r
lm(y ~ GROUP)
```

`$$\hat{Y} = b_0 + b_1D$$`
]

.pull-right[
**Conditional Mean Differences**

Results of Factorial ANOVA

```r
lm(y ~ GROUP*other_VARIABLE)
```

`$$\hat{Y} = b_0 + b_1D + b_2O + b_3DO$$`
]

---
class: inverse

## Next time

More Factorial ANOVA